Databricks has its own file system, DBFS, which looks and behaves like a local file system even though it is backed by cloud storage. This article explains the underlying concepts of DBFS for Databricks beginners and people who are new to cloud storage.

After covering the concepts, the article shows you how to:

- Access and browse the DBFS with the web interface.

- List DBFS files from a notebook.

- List DBFS files using the REST API.

- List DBFS files using the CLI.


What Is Databricks DBFS?

Even if you’re new to the cloud, you are familiar with the local file system on your laptop or desktop computer. It organizes your files into folders and subfolders.

Under the hood, your hardware stores data in a very different structure (blocks located through pointers and addresses), but the file system gives you a simplified way to navigate and access what you need.

“DBFS” is an acronym for Databricks File System. It is an abstraction layer that presents every kind of cloud storage Databricks supports through a single, familiar file-and-folder layout.

For example, if you haven’t worked with AWS S3 buckets, you’ve been spared the unusual way S3 represents a hierarchy: it is a flat, distributed object store in which “folders” are really just shared prefixes of object keys.
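To see what “folders are just key prefixes” means, here is a pure-Python sketch that mimics how a directory view can be derived from a flat list of keys by grouping on the `/` delimiter (the S3 list API does something similar when you pass a delimiter). It works on an in-memory list of made-up keys and does not call S3; the `list_level` function is hypothetical.

```python
from typing import Iterable, List, Tuple


def list_level(keys: Iterable[str], prefix: str = "") -> Tuple[List[str], List[str]]:
    """Emulate one 'directory' listing over a flat set of object keys.

    Returns (objects, folders): keys sitting directly under `prefix`, and the
    distinct sub-prefixes ending in "/" that play the role of folders.
    """
    objects, folders = [], set()
    for key in keys:
        if not key.startswith(prefix):
            continue  # key belongs to a different "folder"
        rest = key[len(prefix):]
        if "/" in rest:
            # Everything up to the next "/" becomes a virtual folder name.
            folders.add(prefix + rest.split("/", 1)[0] + "/")
        else:
            objects.append(key)
    return sorted(objects), sorted(folders)


if __name__ == "__main__":
    KEYS = ["readme.txt", "sales/2023/jan.csv", "sales/2023/feb.csv",
            "sales/summary.csv", "logs/app.log"]
    print(list_level(KEYS))            # (['readme.txt'], ['logs/', 'sales/'])
    print(list_level(KEYS, "sales/"))  # (['sales/summary.csv'], ['sales/2023/'])
```

No folder object exists anywhere in that list; the hierarchy is reconstructed on every listing, which is exactly the kind of detail DBFS hides from you.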

With DBFS between you and your chosen type of cloud storage, you don’t have to worry about the complexities of distributed systems. DBFS looks like the kind of local file system you are used to.

In other words, DBFS gives you unified access to different storage systems through a single abstraction layer.
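As a toy illustration of the idea, and not of how DBFS is actually implemented, the Python sketch below keeps a table that maps user-facing path prefixes to the cloud storage URIs behind them, loosely in the spirit of DBFS mount points. The prefixes, bucket names, and the `resolve` function are all hypothetical.

```python
# Toy model of an abstraction layer (NOT the real DBFS implementation).
# Users see familiar paths; the layer translates them to backing-store URIs.
# All prefixes and bucket names below are hypothetical.
BACKENDS = {
    "/mnt/raw": "s3://example-bucket/raw",
    "/mnt/curated": "gs://example-bucket/curated",
}


def resolve(path: str) -> str:
    """Translate a file-system-style path into the URI of the store holding it."""
    # Check longer prefixes first so a nested prefix would win over a shorter one.
    for prefix in sorted(BACKENDS, key=len, reverse=True):
        if path == prefix or path.startswith(prefix + "/"):
            return BACKENDS[prefix] + path[len(prefix):]
    raise ValueError(f"no storage backend configured for {path!r}")


if __name__ == "__main__":
    print(resolve("/mnt/raw/sales/2023.csv"))      # s3://example-bucket/raw/sales/2023.csv
    print(resolve("/mnt/curated/report.parquet"))  # gs://example-bucket/curated/report.parquet
```

Code that reads `/mnt/raw/sales/2023.csv` never needs to know the bytes live in S3; moving the data to another cloud would only change the table. That separation is the benefit an abstraction layer such as DBFS provides.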

How DBFS Relates To Other File Systems

There are two primary types of file systems: distributed file systems and local file systems.

Distributed File Systems

These file systems let multiple hosts access and share files over a computer network. This is the realm of systems like:

- Hadoop Distributed File System (HDFS)

- Amazon S3

- Azure Blob Storage

- Google Cloud Storage

DBFS acts as an interface to these types of systems, providing a unified, simple access layer.

Local File Systems

In this context, a local file system means the file system of a single machine, such as your own computer or the nodes of a Databricks cluster.

Files in a local file system of a Databricks cluster are typically only accessible from that cluster and are lost when the cluster is terminated.

DBFS, by contrast, persists beyond the lifecycle of a cluster: data stored in DBFS remains intact after the cluster is terminated.
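One place where the distinction shows up in practice: on many Databricks clusters, DBFS is also exposed on the driver node’s local file system under `/dbfs` (not every workspace or edition provides this mount). The same persistent file can then be addressed as `dbfs:/tmp/x.csv` by Spark and `dbutils`, or as `/dbfs/tmp/x.csv` by ordinary Python file APIs. The helpers below are a hypothetical sketch of converting between those two forms; they only manipulate strings and don’t touch any storage.

```python
# Hypothetical helpers: convert between two common ways of addressing a DBFS file.
#   "dbfs:/tmp/x.csv"  -> URI form used by Spark and dbutils
#   "/dbfs/tmp/x.csv"  -> local-path form exposed by the /dbfs mount on the driver,
#                         where that mount is available
DBFS_SCHEME = "dbfs:"
LOCAL_MOUNT = "/dbfs"


def to_local_path(dbfs_uri: str) -> str:
    """Convert 'dbfs:/tmp/x.csv' into '/dbfs/tmp/x.csv'."""
    if not dbfs_uri.startswith(DBFS_SCHEME + "/"):
        raise ValueError(f"not a dbfs:/ URI: {dbfs_uri!r}")
    return LOCAL_MOUNT + dbfs_uri[len(DBFS_SCHEME):]


def to_dbfs_uri(local_path: str) -> str:
    """Convert '/dbfs/tmp/x.csv' into 'dbfs:/tmp/x.csv'."""
    if local_path == LOCAL_MOUNT:
        return DBFS_SCHEME + "/"
    if not local_path.startswith(LOCAL_MOUNT + "/"):
        # e.g. '/tmp/x.csv' is on the node's own disk, not in DBFS
        raise ValueError(f"not under {LOCAL_MOUNT}/: {local_path!r}")
    return DBFS_SCHEME + local_path[len(LOCAL_MOUNT):]
```

A file written to `/tmp/x.csv` lives on the driver’s own disk and disappears with the cluster, whereas a file written to `to_local_path("dbfs:/tmp/x.csv")` lands in DBFS and survives cluster termination.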

How To Access And Browse DBFS In The Web Interface

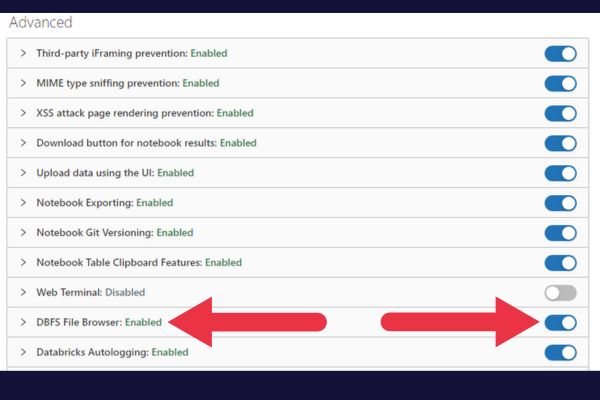

Databricks provides a web-based user interface for accessing and browsing DBFS. A workspace admin can enable or disable this feature.

If you’re using the Community Edition, it’s disabled by default. To enable it, follow these steps:

- Click the settings gear icon at the bottom of the left pane.

- Go to the Workspace Settings tab.

- Scroll down to the Advanced section.

- Enable “DBFS File Browser”.

- Refresh the page.

When the browser is enabled, you can access it with these steps:

- Choose the Data tab in the sidebar of your Databricks workspace.

- Click the “DBFS” button at the top of the page.

How To Get A List Of Files In DBFS In A Notebook

If you’re working within a Databricks notebook, you can use the dbutils.fs module to list the contents of a directory in DBFS.

Here’s an example that lists the contents of the root directory:

```python
display(dbutils.fs.ls("/"))
```

If you’re just getting started, check out our beginner tutorial on using Databricks notebooks.
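`dbutils.fs.ls` lists only one directory level. If you also want the files in subdirectories, the sketch below is one possible approach. It takes the listing function as a parameter (pass `dbutils.fs.ls` in a notebook) and assumes, as with `dbutils.fs.ls` output, that each entry has `path` and `name` attributes and that directory names end with `/`. The `walk_dbfs` name and its `max_depth` limit are illustrative, not part of `dbutils`.

```python
from typing import Any, Callable, Iterator, Sequence


def walk_dbfs(ls: Callable[[str], Sequence[Any]], root: str = "/",
              max_depth: int = 3) -> Iterator[str]:
    """Yield the paths of files under `root`, descending into subdirectories.

    `ls` should behave like dbutils.fs.ls: it returns entries with `.path` and
    `.name`, where directory names end with "/". Directories deeper than
    `max_depth` levels below `root` are not listed.
    """
    stack = [(root, 0)]  # explicit stack instead of recursion
    while stack:
        path, depth = stack.pop()
        for entry in ls(path):
            if entry.name.endswith("/"):
                if depth < max_depth:
                    stack.append((entry.path, depth + 1))
            else:
                yield entry.path
```

In a notebook, `for p in walk_dbfs(dbutils.fs.ls, "/", max_depth=1): print(p)` prints the files in the root and one level below. Each directory visited costs one listing call, so keep `max_depth` small on large trees.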

How To List DBFS Files Using The REST API

The DBFS API offers a programmatic interface to DBFS. The API consists of a set of operations that can be used to manage files and directories in DBFS.

The DBFS API is a REST API. Read operations such as list and get-status use HTTP GET, while operations that change files and directories, such as put, mkdirs, move, and delete, use HTTP POST with a JSON request body.
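As a sketch of calling the list operation from Python with only the standard library, the function below sends `GET /api/2.0/dbfs/list` with the directory as a `path` query parameter. It assumes you authenticate with a personal access token sent as a Bearer token; `<databricks-url>` and the token are placeholders, and the `list_dbfs` and `parse_list_response` names are illustrative.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List


def list_dbfs(host: str, token: str, path: str = "/") -> List[Dict[str, Any]]:
    """Call GET /api/2.0/dbfs/list and return the entries in the "files" array.

    Raises urllib.error.HTTPError for non-2xx responses (e.g. bad token, bad path).
    """
    query = urllib.parse.urlencode({"path": path})
    request = urllib.request.Request(
        f"{host.rstrip('/')}/api/2.0/dbfs/list?{query}",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )
    with urllib.request.urlopen(request) as response:
        return parse_list_response(response.read())


def parse_list_response(body: bytes) -> List[Dict[str, Any]]:
    """Extract the file entries; an empty directory may return no "files" key."""
    return json.loads(body).get("files", [])
```

For example, `for f in list_dbfs("https://<databricks-url>", "<personal-access-token>"): print(f["path"], f["is_dir"], f["file_size"])` prints one line per entry in the root directory. Keeping the parsing in its own function makes it easy to test without a live workspace.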

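This example lists the files in the root directory using curl. Replace `<databricks-url>` with your workspace URL and `<personal-access-token>` with a Databricks personal access token:

```shell
curl -G "https://<databricks-url>/api/2.0/dbfs/list" \
  -H "Authorization: Bearer <personal-access-token>" \
  --data-urlencode "path=/"
```

How To List DBFS Files Using The Databricks CLI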

Once you install and configure the Databricks CLI on your local machine, you can use it to list the files in a DBFS directory.

Here’s an example:

```shell
databricks fs ls dbfs:/
```
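To call the CLI from a script rather than typing the command, you can wrap it with Python’s `subprocess` module. The sketch below assumes the CLI is installed, on your `PATH`, and already authenticated, and that `databricks fs ls` prints one entry per line (check this against your CLI version, since output formats have changed between releases). The `cli_ls` name and its `run` parameter are illustrative; `run` exists only so the function can be tested without the CLI.

```python
import subprocess
from typing import Callable, List


def cli_ls(path: str = "dbfs:/", run: Callable = subprocess.run) -> List[str]:
    """Run `databricks fs ls <path>` and return its non-empty output lines."""
    result = run(
        ["databricks", "fs", "ls", path],
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,            # decode output as str
        check=True,           # raise CalledProcessError on a non-zero exit code
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]
```

For example, `cli_ls("dbfs:/FileStore")` returns the listed entries as a list of strings, and a missing path or bad credentials surfaces as a `subprocess.CalledProcessError` rather than being silently ignored.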